Image-generation algorithms have been shown to reproduce sexist bias. They are also picking up racial biases.

Artificial intelligence (AI) is often described as the future of growth, and companies around the world are investing in machines that can ‘think’ more like humans. Amazing, isn’t it? But how would you react if I said that artificial intelligence can be sexist too? Today’s AI can auto-complete a photo of someone’s face by predicting the rest of their body and, surprisingly, it seems to assume that women just don’t like wearing clothes.

That statement summarises the conclusions of a new research study on image-generation algorithms that auto-complete a person’s body when shown a face. According to the study, when researchers fed the algorithm a picture of a man cropped below the neck, it autocompleted the image with him wearing a suit almost 43 percent of the time. When they did the same with a picture of a woman, the algorithm autocompleted the image with her wearing a low-cut top or bikini a massive 53 percent of the time.

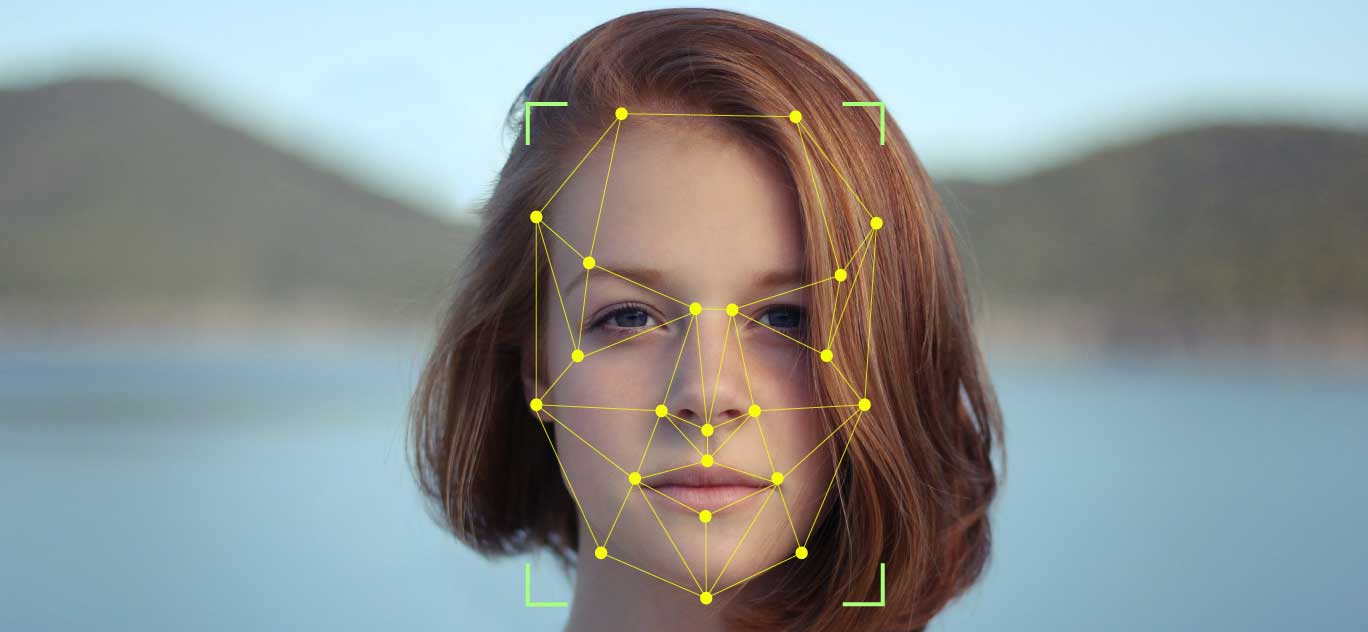

Bias in facial recognition is problematic:

This is not the first instance of algorithms showing such biases. Facial recognition algorithms have a history of frequently misidentifying people with darker skin. Beyond that, algorithms have reportedly recommended resources to healthier white people before recommending the same resources to sicker Black people.

Language-generation algorithms also display racist and sexist ideas, as they are usually trained on the language of the internet, including the hate speech, misinformation, and disinformation present on various social media platforms. According to the latest study, the same can be said of image-generation algorithms.

To highlight these biases, the paper’s US-based authors, CMU’s Ryan Steed and George Washington University’s Aylin Caliskan, showed the algorithm a headshot of the Democratic congresswoman Alexandria Ocasio-Cortez, taken from a photoshoot in which she was wearing business attire. To their surprise, the software automatically recreated full-body pictures of her in various poses, the majority of which showed her in a bikini or a low-cut top.

Why was the algorithm choosing bikini pics?

The answer becomes clear simply by searching for pictures of women on the internet, which is full of images of scantily clad women. Because such pictures are available in huge numbers, the AI has probably learned “a typical picture” of “what a woman looks like” by consuming an online dataset that contained many pictures of half-naked women. This study is a true example of reaping what you sow, and a reminder that human biases are baked into algorithms.

The study revealed that the algorithm associates male and female faces with particular body types and clothes. The image-generation algorithm had also learned racial biases. In another test, where the algorithm had to determine whether a person was carrying a weapon or a tool, it was more likely to suggest that Black individuals were holding weapons.

The objective behind testing these artificial intelligence algorithms is to raise awareness of their biases and to encourage more careful management of how such algorithms are used.

**(After ethical concerns were raised on Twitter, the researchers had the computer-generated image of Alexandria Ocasio-Cortez in a swimsuit removed from the research paper.)**