Autonomous weapons systems (AWS), often referred to as “killer robots,” are one of the most discussed topics in defence and technology today. These military systems can select and engage targets with little or no human intervention. While they promise faster decision-making and reduced risk to soldiers, they also raise deep ethical, legal, and security concerns. If terrorist organisations gained access to these technologies, they could fuel asymmetric conflict.

The advancement of artificial intelligence (AI) offers potential advantages, but it has also raised difficult questions about how far autonomy in warfare should be taken. This article explores the future of autonomous weapons systems and their ethical and strategic implications.

What are Autonomous Weapons Systems?

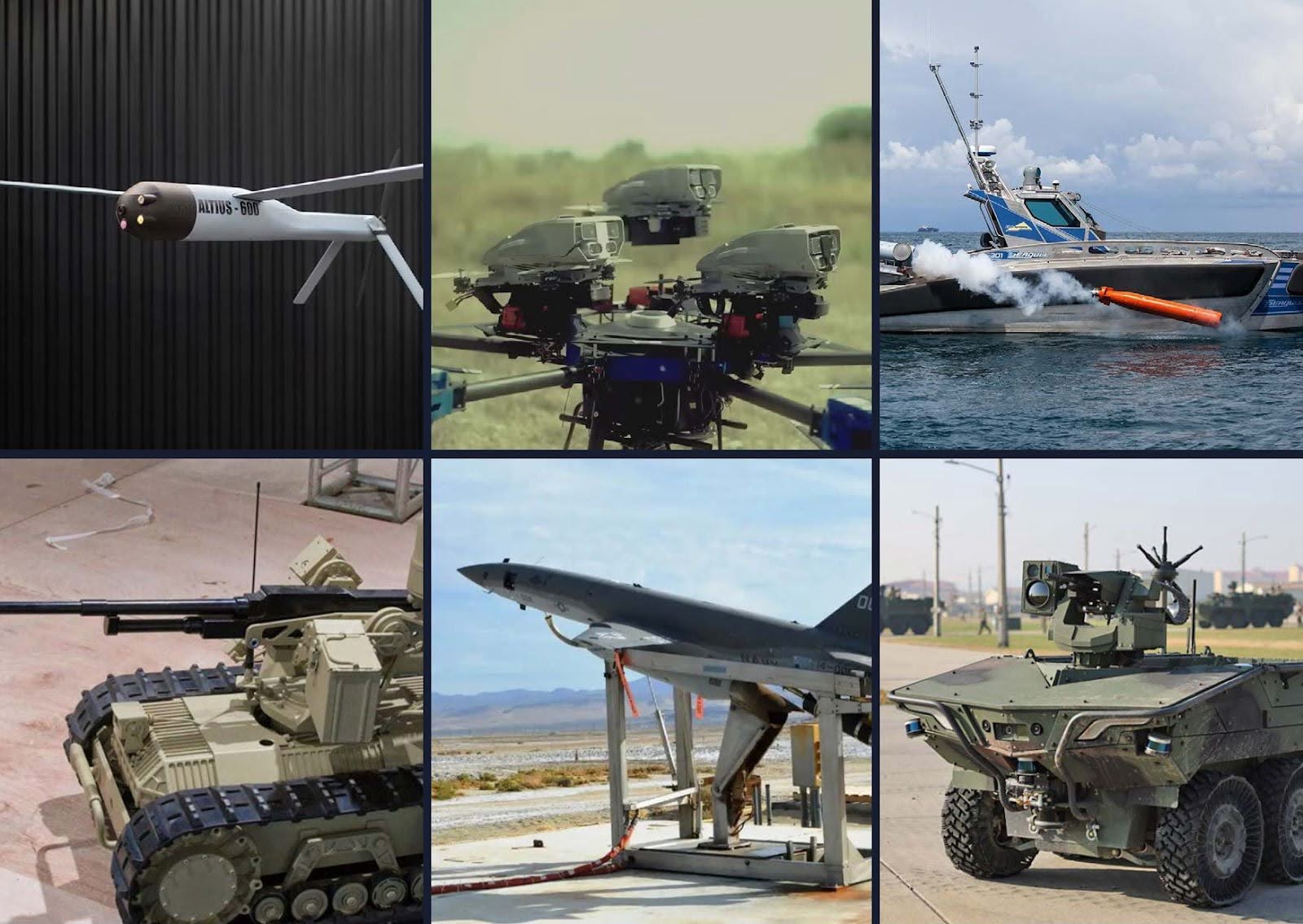

Autonomous weapons systems are machines capable of identifying, tracking, and attacking targets without direct human control. They range from simple automated defence turrets to highly sophisticated drones and robotic tanks.

Unlike remotely controlled systems, where a human operator makes the final decision to fire, AWS can make life-and-death decisions on their own. This shift from “human-in-the-loop” (a person authorises each engagement) to “human-on-the-loop” (a person supervises and can intervene) or even “human-out-of-the-loop” (no human involvement) raises fundamental questions about accountability and morality in warfare.

The Adoption of Autonomous Weapons Systems

Many countries, including the United States, China, Russia, and Israel, are investing heavily in the development of AI-driven military systems. This technological race is driven by the belief that whoever leads in AI will hold a major advantage in future conflicts.

Autonomous drones can swarm enemy defences, self-driving tanks can operate in dangerous areas without risking soldiers, and naval systems can patrol vast regions with minimal human oversight.

The race is not only about military power but also about setting global norms for the use of such systems.

Ethical Challenges

Many experts raise concerns regarding the moral and ethical implications of machines making life-and-death decisions:

1. Accountability Gap

One of the biggest ethical concerns is who bears responsibility if an autonomous weapon kills civilians by mistake: the programmer, the military commander, or the machine itself? Current laws of war are built around human decision-makers, but AWS could make decisions faster than humans can review them.

2. Risk of Civilian Harm

Even advanced AI can make mistakes, especially in complex urban battlefields where there’s a mix of civilians and combatants. A machine may misidentify a target, leading to unnecessary loss of life. Unlike humans, machines lack moral judgment or compassion, which can make such errors harder to accept.

3. The Problem of Delegating Life-and-Death Decisions

Many ethicists argue that allowing a machine to decide who lives and dies crosses a moral line. Human dignity, they say, requires that a person, not an algorithm, makes the final call on lethal force.

Strategic Implications

Beyond ethics, autonomous weapons also carry serious consequences for global security, power dynamics, and the very nature of warfare.

1. Changing the Nature of War

Autonomous weapons could make wars faster and more destructive. Since they can act without waiting for human orders, battles might occur at machine speed, leaving little time for diplomacy or negotiation. Moreover, by reducing the risk to a nation’s own soldiers, AWS could lower the political cost of war and make military action more tempting. This creates a dangerous feedback loop, where more wars are fought more quickly with less public scrutiny.

2. Global AI Arms Race

The development of AWS risks triggering a global arms race. If one nation gains an advantage, others will try to catch up, potentially leading to widespread deployment of systems with minimal safeguards. This competition increases instability and raises the risk of accidental escalation.

3. Cybersecurity Risks

Autonomous weapons rely on software and networks, making them prone to hacking. A cyberattack could hijack control systems, turn weapons against their operators, or spark unintended conflicts. The more we automate, the greater the risk that a single breach could have catastrophic consequences.

Navigating the Threat

The risk that autonomous weapons systems will spread rapidly into the hands of terrorist organisations and extremists can be curbed through the following measures.

1. Strengthening International Regulations

Global rules must clearly define autonomous weapons and set binding limits on their use. Strong treaties can help ensure accountability, establish ethical standards, and prevent nations from bypassing moral responsibilities.

2. Enhancing Detection and Prevention

Intelligence agencies should develop advanced tools to detect and disable autonomous weapons early. Doing so would help stop terrorist groups from deploying such weapons before they cause harm.

3. Promoting Ethical AI Development

AI developers must embed ethical considerations and fail-safes into every system. Oversight and testing should, in turn, ensure that humans remain in control of all lethal decisions.

4. Fostering Global Cooperation

Since this threat is global, countries must share intelligence, collaborate on research, and coordinate efforts to prevent misuse and proliferation of autonomous weapons technology.

Conclusion

Autonomous weapons systems may appear to promise precision and efficiency, but they threaten to undermine human control over one of the most serious decisions possible: taking another life. The world stands at a crossroads. If we allow machines to decide who lives and dies, we risk turning warfare into a fully automated process, devoid of morality or accountability. The future of humanity demands that we draw a line and reserve certain decisions for humans.

Article by Gayatri Sarin