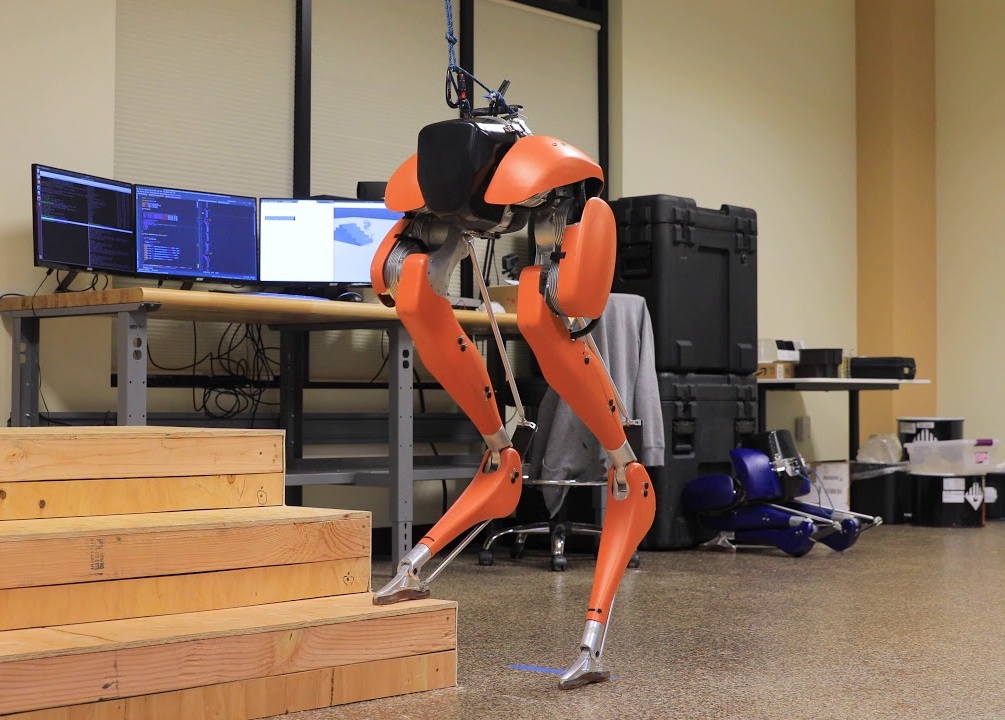

Cassie, a pair of robotic legs, has taught itself to walk using reinforcement learning, a training technique that lets AIs learn complex behavior through trial and error. It is the first time reinforcement learning has been used to teach a two-legged robot how to walk from scratch.

Viral videos of Boston Dynamics' Atlas robot, seen by millions on social media, have raised expectations of what robots can do. Atlas has been shown standing on one leg, jumping over boxes, and dancing, but all of these movements require heavy hand-tuning.

“These videos may lead some people to believe that this is a solved and easy problem,” Zhongyu Li of the University of California, Berkeley, who worked on the Cassie project, told MIT Technology Review. “But we still have a long way to go to have humanoid robots reliably operate and live in human environments.”

At present, Cassie can only walk; it cannot dance or perform other feats like Atlas. But teaching a robot to learn to walk by itself is a step toward robots that can handle a wide range of terrain and other complex situations.

Learning limitations

The team used reinforcement learning to train the robot to walk inside a simulation, but the main challenge lies in transferring that ability to real life. Differences between the physics simulated in a virtual environment and the physics of the real world lead to failures.

The way friction works between a robot's feet and the ground, for example, is a common source of big failures. A heavy robot can lose its balance and fall if it slips even slightly while walking or performing any other feat.

The solution

To bridge the gap between simulated and real-world physics, the team at Berkeley used two levels of virtual environment. First, a simulated version of the robot learned to walk using a large existing database of robotic movements. Then the trained model was transferred to a second virtual environment whose physics are very close to those of the real world.

The real-life Cassie was able to walk using the model learned in the two simulations without any extra fine-tuning. The robot can walk across rough and slippery terrain, carry unexpected loads, and recover quickly after being pushed.